运维层

7.1 dashboard配置

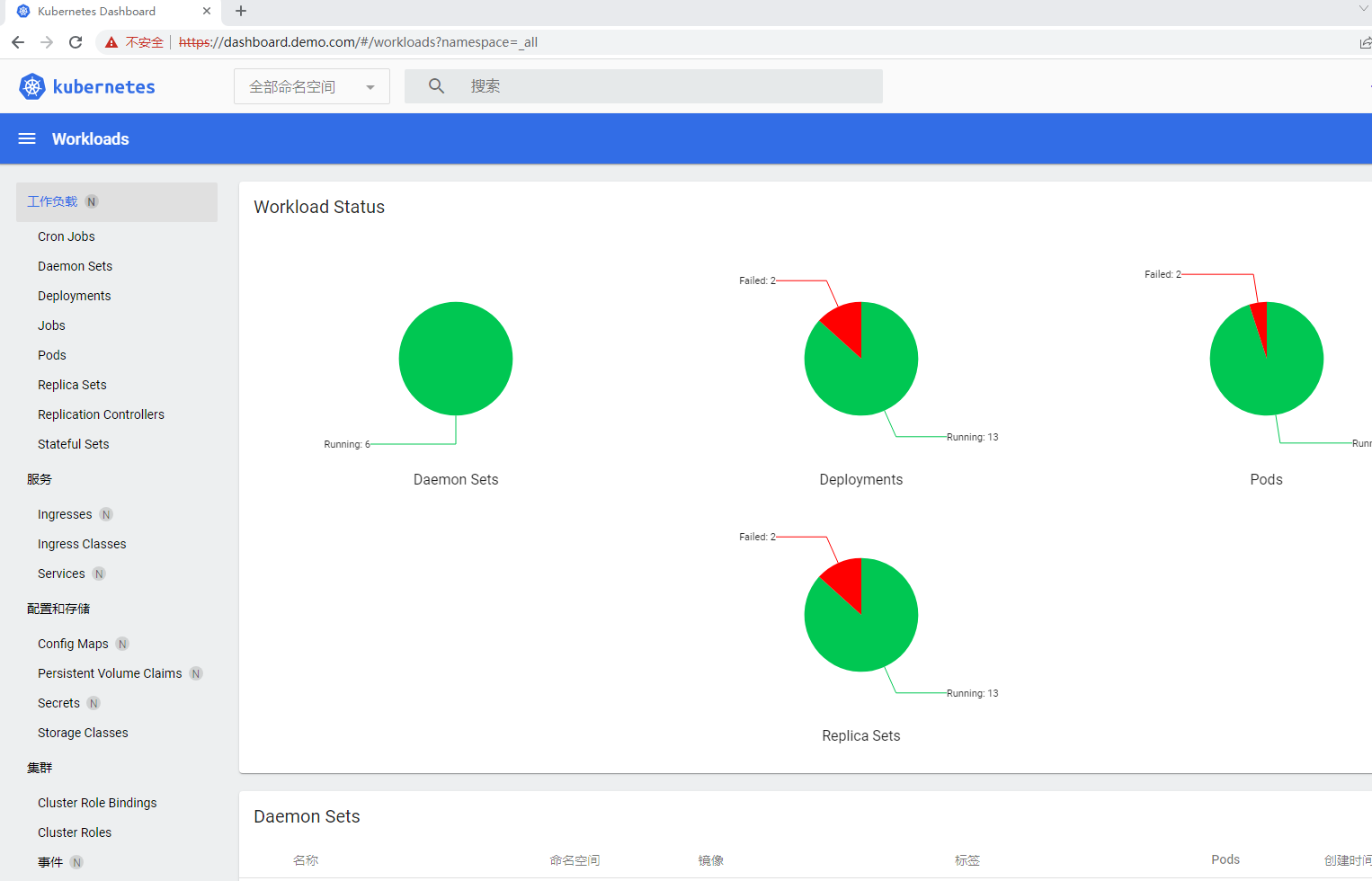

安装k8s官方提供的dashboard

# wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml -o dashboard.yaml

# cat dashboard.yaml | grep image:

image: kubernetesui/dashboard:v2.7.0

image: kubernetesui/metrics-scraper:v1.0.8

转存到私仓,并修改镜像地址,如下

# cat dashboard.yaml | grep image:

image: harbor.demo.com/k8s/dashboard:v2.7.0

image: harbor.demo.com/k8s/metrics-scraper:v1.0.8

配置svc采用LB方式

# vi dashboard.yaml

...

kind: Service

apiVersion: v1

metadata:

labels:

k8s-app: kubernetes-dashboard

name: kubernetes-dashboard

namespace: kubernetes-dashboard

spec:

ports:

- port: 443

targetPort: 8443

selector:

k8s-app: kubernetes-dashboard

type: LoadBalancer

...

# kubectl apply -f dashboard.yaml

# kubectl get all -n kubernetes-dashboard

NAME READY STATUS RESTARTS AGE

pod/dashboard-metrics-scraper-d97df5556-vvv9w 1/1 Running 0 16s

pod/kubernetes-dashboard-6694866798-pcttp 1/1 Running 0 16s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/dashboard-metrics-scraper ClusterIP 10.10.153.173 <none> 8000/TCP 17s

service/kubernetes-dashboard LoadBalancer 10.10.186.6 192.168.3.182 443:31107/TCP 18s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/dashboard-metrics-scraper 1/1 1 1 17s

deployment.apps/kubernetes-dashboard 1/1 1 1 17s

NAME DESIRED CURRENT READY AGE

replicaset.apps/dashboard-metrics-scraper-d97df5556 1 1 1 17s

replicaset.apps/kubernetes-dashboard-6694866798 1 1 1 17s

添加用户

# cat admin-user.yaml

apiVersion: v1

kind: ServiceAccount

metadata:

name: admin-user

namespace: kubernetes-dashboard

---

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRoleBinding

metadata:

name: admin-user

roleRef:

apiGroup: rbac.authorization.k8s.io

kind: ClusterRole

name: cluster-admin

subjects:

- kind: ServiceAccount

name: admin-user

namespace: kubernetes-dashboard

# kubectl apply -f admin-user.yaml

# kubectl -n kubernetes-dashboard create token admin-user

eyJhbGciOiJSUzI1NiIsImtpZCI6IjlUNmROZTZZSEJ4WEJIell2OG5IQS1oTGVLYjJWRU9QRlhzUFBmdlVONU0ifQ.eyJhdWQiOlsiaHR0cHM6Ly9rdWJlcm5ldGVzLmRlZmF1bHQuc3ZjLmNsdXN0ZXIubG9jYWwiXSwiZXhwIjoxNjg2NTQ3MTE3LCJpYXQiOjE2ODY1NDM1MTcsImlzcyI6Imh0dHBzOi8va3ViZXJuZXRlcy5kZWZhdWx0LnN2Yy5jbHVzdGVyLmxvY2FsIiwia3ViZXJuZXRlcy5pbyI6eyJuYW1lc3BhY2UiOiJrdWJlcm5ldGVzLWRhc2hib2FyZCIsInNlcnZpY2VhY2NvdW50Ijp7Im5hbWUiOiJhZG1pbi11c2VyIiwidWlkIjoiNjk1MWFlODktODYwMi00NzAzLTk3NzYtMmNhNmU0OTJlZjQ2In19LCJuYmYiOjE2ODY1NDM1MTcsInN1YiI6InN5c3RlbTpzZXJ2aWNlYWNjb3VudDprdWJlcm5ldGVzLWRhc2hib2FyZDphZG1pbi11c2VyIn0.j9XHrznphuwv56hcSGRlcOxvzuGGbKEdPZB1r5jc84kNICp2sTwXvr71d6wdYtzGxjODZ81kTqVqRQUcUKi0Uh8OWjxWcspNJIWk0y6_Eub823YWzkusktb7NdqCb6BYIyX79V4iFUQaVjp9BlEXSZ6vnuJhwvEonumDrIo0JtUF8PT1ZV3319kajFTZMWza-QHRMFOjGC74YleMd-7gDA-aimoxjPQIVfIWF2PhssLj38Ci-KZddxOE1yE42QFOmPozOzCT348ZEJEO1lhDC4trnK2TTU8jb1sM7RyPKuvyY0fbimqNi6iGL-aqCaQT6_nWDvxkVycapJ3KAwz2Zw

# nslookup dashboard.demo.com

Server: 192.168.3.250

Address: 192.168.3.250#53

Name: dashboard.demo.com

Address: 192.168.3.182

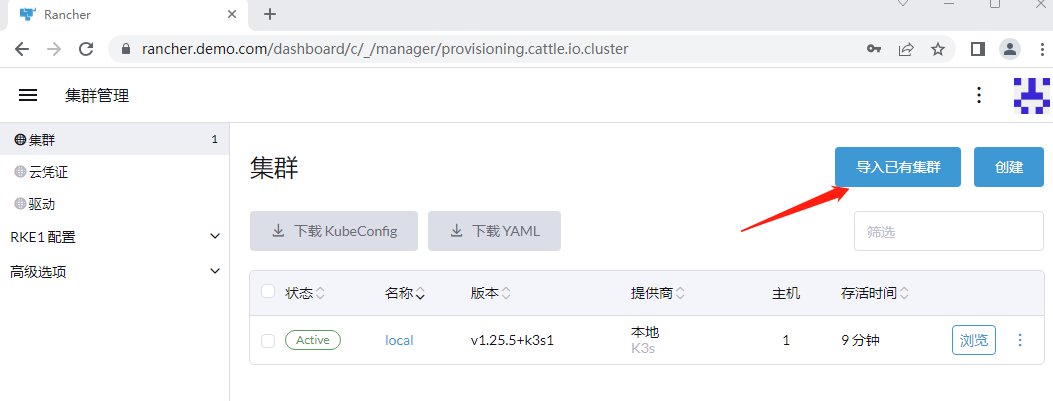

7.2 rancher

https://github.com/rancher/rancher

常用的k8s容器编排工具如openshift和rancher. 本文以rancher为例。rancher可以同时管理多个k8s集群。

rancher版本

latest 当前最新版(本文测试时其版本为v2.7.3)

stable 当前稳定版(本文测试时其版本为v2.6.12)

7.2.1 rancher节点安装

安装docker

yum -y install yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

yum -y install docker-ce docker-ce-cli containerd.io

systemctl enable docker containerd

systemctl start docker containerd

rancher镜像拉取(比较大,建议拉取后转存私仓)

# docker pull rancher/rancher:latest

创建rancher节点目录

mkdir -p /opt/racher

7.2.1.1 rancher-web被访问时的证书配置

将域名证书复制到/opt/racher/ca

将证书等改名,如下:

ca.pem ----> cacerts.pem

web-key.pem ---> key.pem

web.pem ---> cert.pem

# tree /opt/rancher/ca

/opt/rancher/ca

├── cacerts.pem

├── cert.pem

└── key.pem

7.2.1.2 私仓配置

# cat /opt/rancher/registries.yaml

mirrors:

harbor.demo.com:

endpoint:

- "harbor.demo.com"

configs:

"harbor.demo.com":

auth:

username: admin

password: 12qwaszx+pp

tls:

ca_file: /opt/harbor/ca.crt

cert_file: /opt/harbor/harbor.demo.com.cert

key_file: /opt/harbor/harbor.demo.com.key

其中的 /opt/harbor/ 目录是rancher节点运行时其容器内部的目录。

私仓库被访问时需使用的证书

# tree /opt/rancher/ca_harbor/

/opt/rancher/ca_harbor/

├── ca.crt

├── harbor.demo.com.cert

└── harbor.demo.com.key

在启动rancher时,需将 /opt/rancher/ca_harbor/ 映射到容器的 /opt/harbor/ 目录(在registries-https.yaml中已指定该目录)

7.2.1.3 安装rancher节点

# docker run -d -it -p 80:80 -p 443:443 --name rancher --privileged=true --restart=unless-stopped \

-v /opt/rancher/k8s:/var/lib/rancher \

-v /opt/rancher/ca:/etc/rancher/ssl \

-e SSL_CERT_DIR="/etc/rancher/ssl" \

-e CATTLE_SYSTEM_DEFAULT_REGISTRY=harbor.demo.com \

-v /opt/rancher/registries.yaml:/etc/rancher/k3s/registries.yaml \

-v /opt/rancher/ca_harbor:/opt/harbor \

rancher/rancher:latest

查看启动日志

# docker logs rancher -f

7.2.1.4 访问rancher web

# nslookup rancher.demo.com

Server: 192.168.3.250

Address: 192.168.3.250#53

Name: rancher.demo.com

Address: 10.2.20.151

查看默认admin用户密码

# docker exec -it rancher kubectl get secret --namespace cattle-system bootstrap-secret -o go-template='{{.data.bootstrapPassword|base64decode}}{{"\n"}}'

若忘记密码,则重设密码

# docker exec -it rancher reset-password

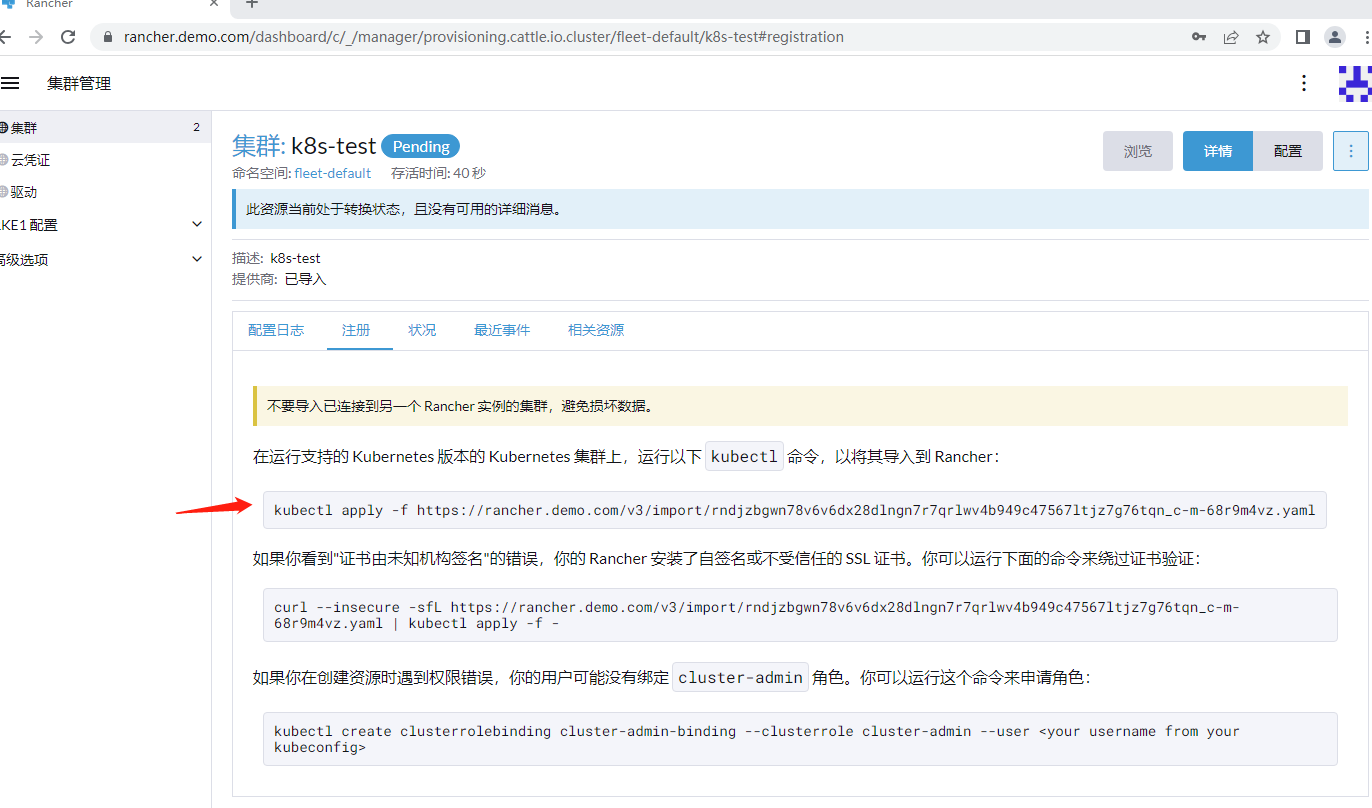

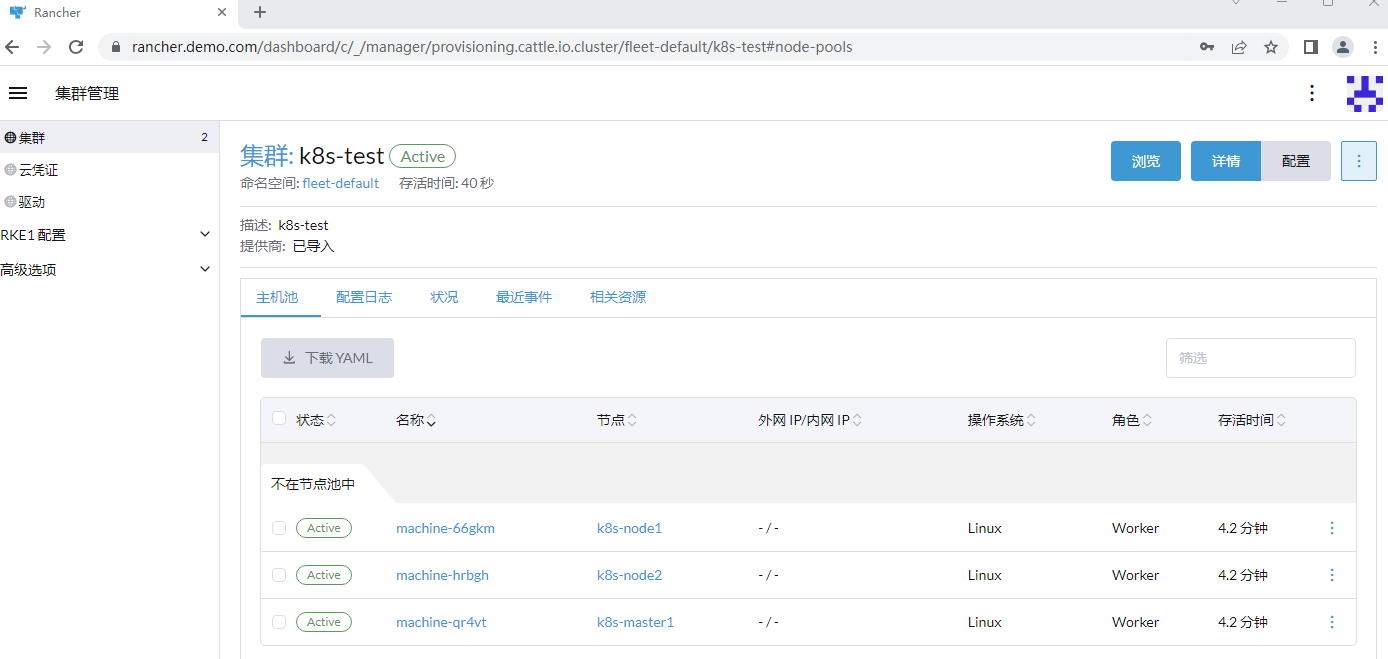

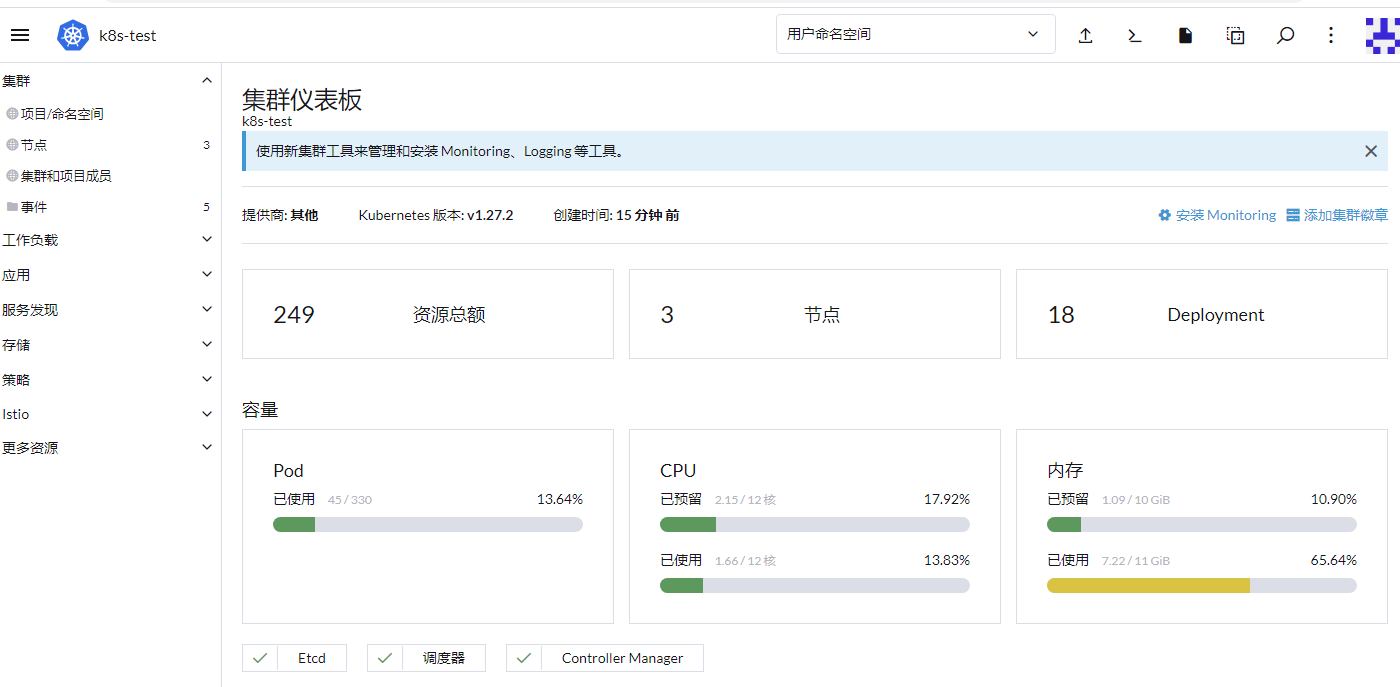

7.2.2 添加外部k8s集群

# curl --insecure -sfL https://rancher.demo.com/v3/import/rndjzbgwn78v6v6dx28dlngn7r7qrlwv4b949c47567ltjz7g76tqn_c-m-68r9m4vz.yaml -o rancher.yaml

# cat rancher.yaml | grep image:

image: rancher/rancher-agent:v2.7.3

改为私仓地址

# cat rancher.yaml | grep image:

image: harbor.demo.com/rancher/rancher-agent:v2.7.3

安装

# kubectl apply -f rancher.yaml

查看

# kubectl -n cattle-system get all

NAME READY STATUS RESTARTS AGE

pod/cattle-cluster-agent-5cb7bb7b9b-kc5fn 0/1 ContainerCreating 0 27s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

service/cattle-cluster-agent ClusterIP 10.10.104.246 <none> 80/TCP,443/TCP 27s

NAME READY UP-TO-DATE AVAILABLE AGE

deployment.apps/cattle-cluster-agent 0/1 1 0 28s

NAME DESIRED CURRENT READY AGE

replicaset.apps/cattle-cluster-agent-5cb7bb7b9b 1 1 0 28s

查看某个pod

查看某个pod

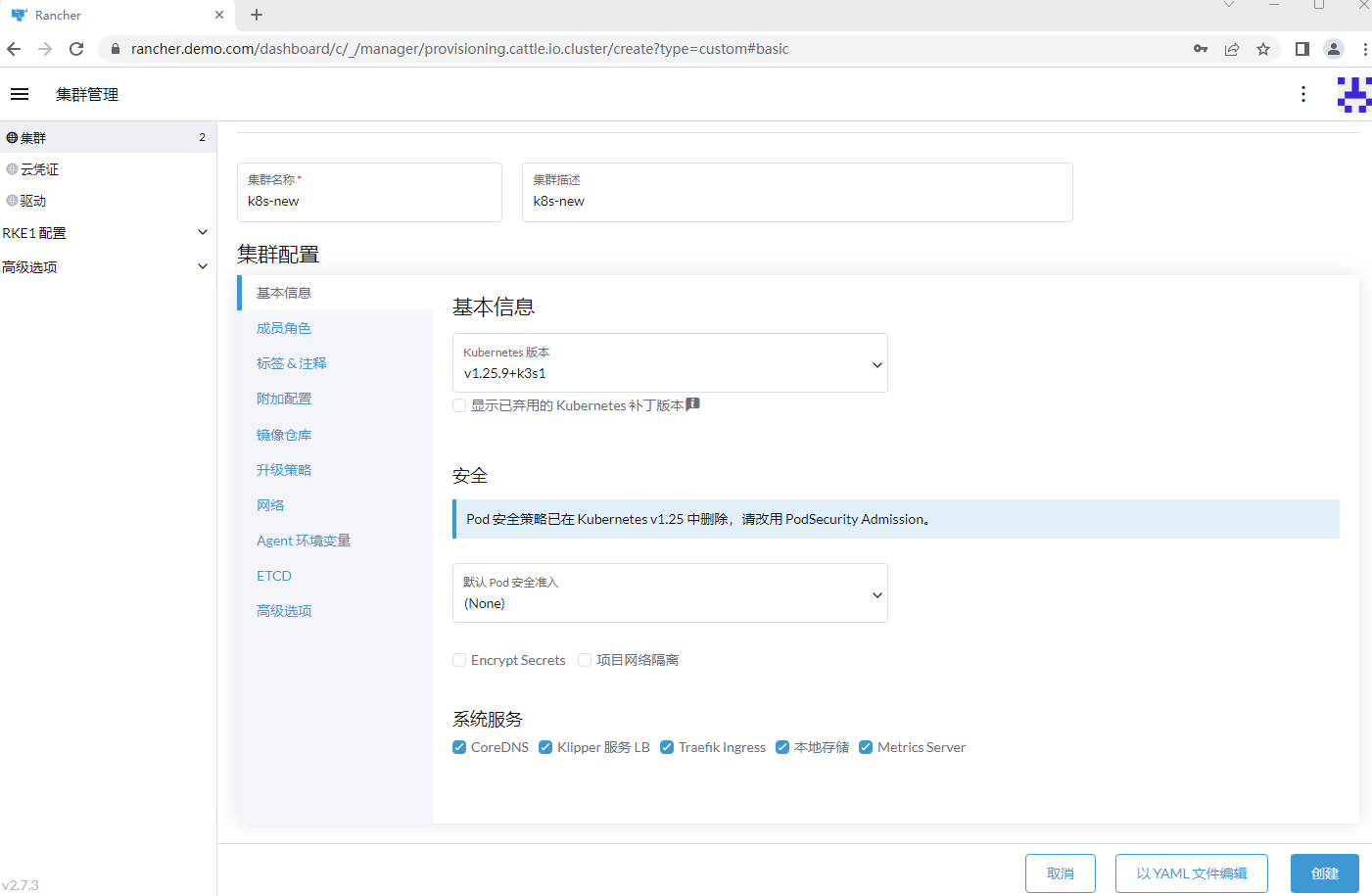

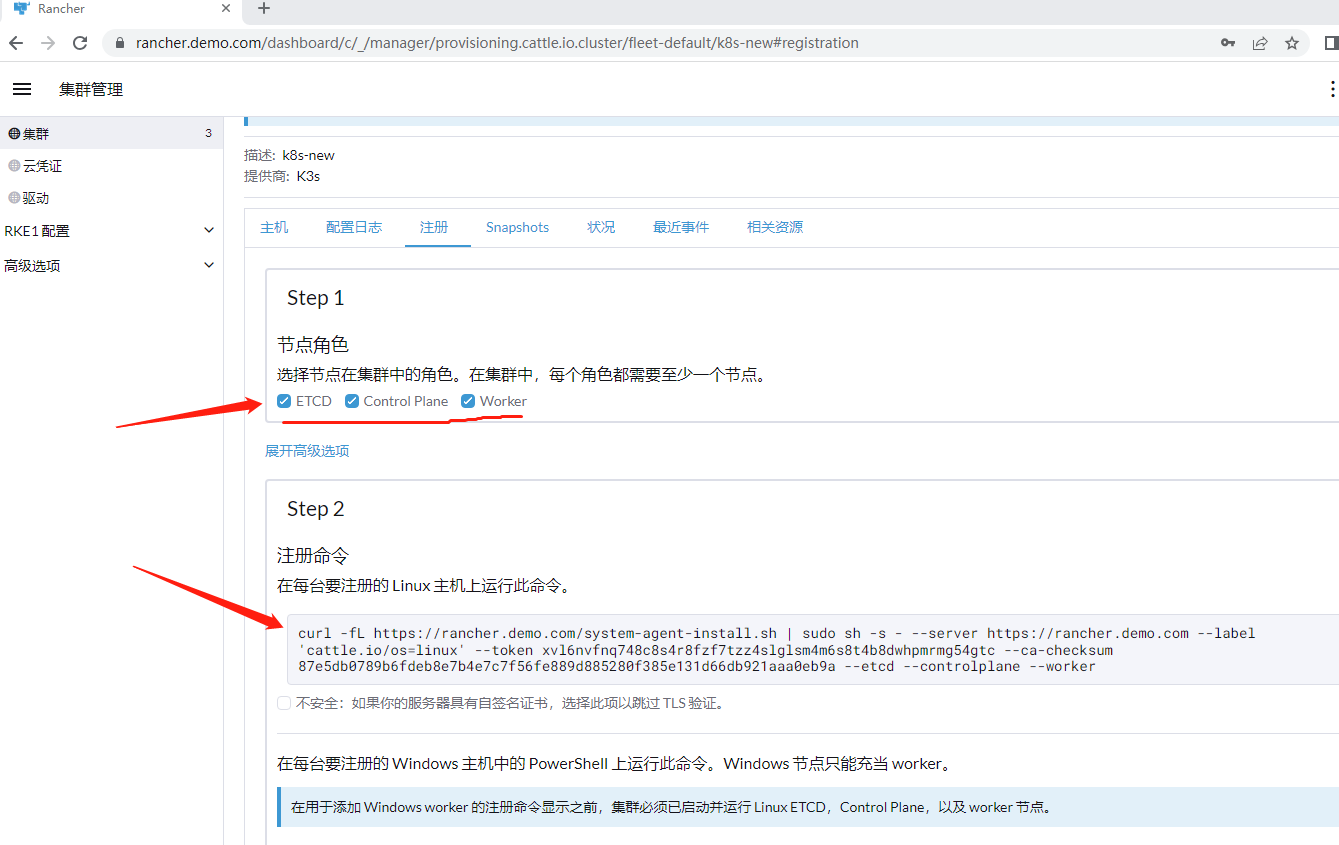

7.2.3 新增k8s集群

使用rancher建立新的k8s集群较简单,在目标节点上直接运行相应命令即可。

7.3 prometheus/grafana

https://github.com/prometheus/prometheus/

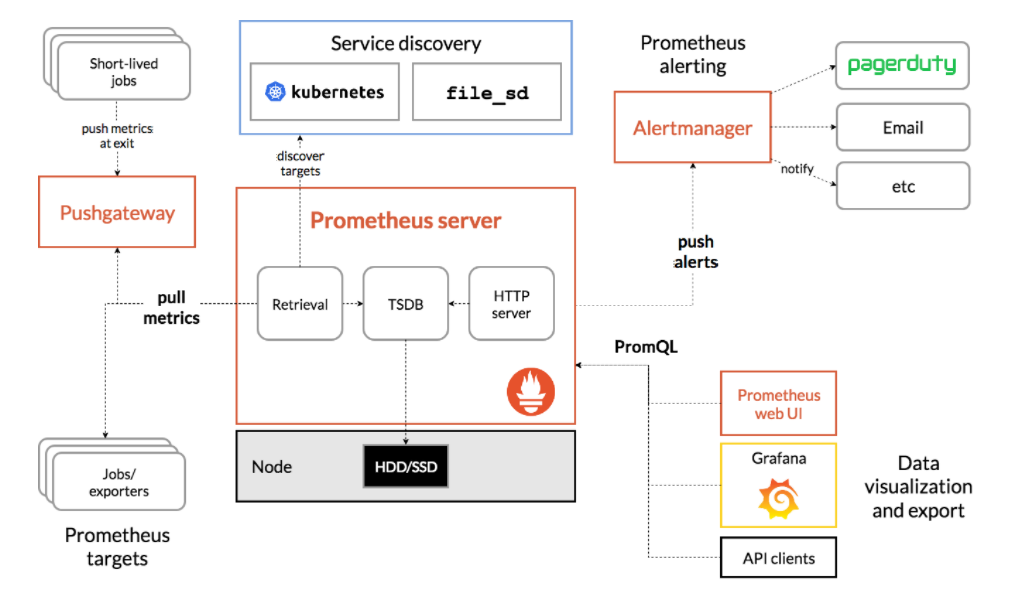

Prometheus是一个开源的系统监控和报警系统,是当前一套非常流行的开源监控和报警系统之一,CNCF托管的项目,在kubernetes容器管理系统中,通常会搭配prometheus进行监控,Prometheus性能足够支撑上万台规模的集群。

prometheus显著特点

prometheus显著特点

- 多维数据模型(时间序列由metrics指标名字和设置key/value键/值的labels构成),高效的存储

- 灵活的查询语言(PromQL)

- 采用http协议,使用pull模式,拉取数据

- 通过中间网关支持推送。

- 丰富多样的Metrics采样器exporter。

- 与Grafana完美结合,由Grafana提供数据可视化能力。

Grafana 是一个用于可视化大型测量数据的开源系统,它的功能非常强大,界面也非常漂亮,使用它可以创建自定义的控制面板,你可以在面板中配置要显示的数据和显示方式,有大量第三方可视插件可使用。

Prometheus/Grafana支持多种方式安装,如源码、二进制、镜像等方式,都比较简单。

本文以kube-prometheus方式在k8s上安装Prometheus/Grafana.

官方安装文档: https://prometheus-operator.dev/docs/prologue/quick-start/

安装要求: https://github.com/prometheus-operator/kube-prometheus#compatibility

官方Github地址: https://github.com/prometheus-operator/kube-prometheus

7.3.1 kube-prometheus安装

下载

# git clone https://github.com/coreos/kube-prometheus.git

# cd kube-prometheus/manifests

查看所需镜像,并转存到私仓,同时修改清单文件中的镜像地址改为私仓

# find ./ | xargs grep image:

# cat prometheusOperator-deployment.yaml | grep prometheus-config-reloader

- --prometheus-config-reloader=quay.io/prometheus-operator/prometheus-config-reloader:v0.65.2

更改prometheusAdapter-clusterRoleAggregatedMetricsReader.yaml

# cat prometheusAdapter-clusterRoleAggregatedMetricsReader.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: metrics-adapter

app.kubernetes.io/name: prometheus-adapter

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 0.10.0

rbac.authorization.k8s.io/aggregate-to-admin: "true"

rbac.authorization.k8s.io/aggregate-to-edit: "true"

rbac.authorization.k8s.io/aggregate-to-view: "true"

name: system:aggregated-metrics-reader

#namespace: monitoring

rules:

- apiGroups:

- metrics.k8s.io

resources:

- services

- endpoints

- pods

- nodes

verbs:

- get

- list

- watch

提示:

本配置定义的资源在安装metrics-reader时已提供,此时只需更新一下配置即可。

更改prometheus-clusterRole.yaml

下面的更改,参照istio中提供的prometheus配置

# cat prometheus-clusterRole.yaml

apiVersion: rbac.authorization.k8s.io/v1

kind: ClusterRole

metadata:

labels:

app.kubernetes.io/component: prometheus

app.kubernetes.io/instance: k8s

app.kubernetes.io/name: prometheus

app.kubernetes.io/part-of: kube-prometheus

app.kubernetes.io/version: 2.44.0

name: prometheus-k8s

rules:

- apiGroups:

- ""

resources:

- nodes

- nodes/proxy

- nodes/metrics

- services

- endpoints

- pods

- ingresses

- configmaps

verbs:

- get

- list

- watch

- apiGroups:

- "extensions"

- "networking.k8s.io"

resources:

- ingresses/status

- ingresses

verbs:

- get

- list

- watch

- nonResourceURLs:

- "/metrics"

verbs:

- get

说明:

若采用kube-promethenus提供的配置,则在创建ServiceMonitor时不会被prometheus识别。

安装

# kubectl create -f setup/

# kubectl apply -f ./prometheusAdapter-clusterRoleAggregatedMetricsReader.yaml

# rm -f prometheusAdapter-clusterRoleAggregatedMetricsReader.yaml

# kubectl create -f ./

查看svc

# kubectl -n monitoring get svc

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

alertmanager-main ClusterIP 10.10.251.51 <none> 9093/TCP,8080/TCP 7m18s

alertmanager-operated ClusterIP None <none> 9093/TCP,9094/TCP,9094/UDP 6m51s

blackbox-exporter ClusterIP 10.10.195.115 <none> 9115/TCP,19115/TCP 7m17s

grafana ClusterIP 10.10.121.183 <none> 3000/TCP 7m13s

kube-state-metrics ClusterIP None <none> 8443/TCP,9443/TCP 7m12s

node-exporter ClusterIP None <none> 9100/TCP 7m10s

prometheus-k8s ClusterIP 10.10.230.211 <none> 9090/TCP,8080/TCP 7m9s

prometheus-operated ClusterIP None <none> 9090/TCP 6m48s

prometheus-operator ClusterIP None <none> 8443/TCP 7m8s

域名解析

prometheus.demo.com 192.168.3.180

grafana.demo.com 192.168.3.180

alert.demo.com 192.168.3.180

对外开放服务

# cat open-ui.yaml

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: monitor-prometheus

namespace: monitoring

spec:

ingressClassName: nginx

rules:

- host: prometheus.demo.com

http:

paths:

- backend:

service:

name: prometheus-k8s

port:

number: 9090

path: /

pathType: Prefix

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: monitor-grafana

namespace: monitoring

spec:

ingressClassName: nginx

rules:

- host: grafana.demo.com

http:

paths:

- backend:

service:

name: grafana

port:

number: 3000

path: /

pathType: Prefix

---

apiVersion: networking.k8s.io/v1

kind: Ingress

metadata:

name: monitor-alert

namespace: monitoring

spec:

ingressClassName: nginx

rules:

- host: alert.demo.com

http:

paths:

- backend:

service:

name: alertmanager-main

port:

number: 9093

path: /

pathType: Prefix

# kubectl apply -f open-ui.yaml

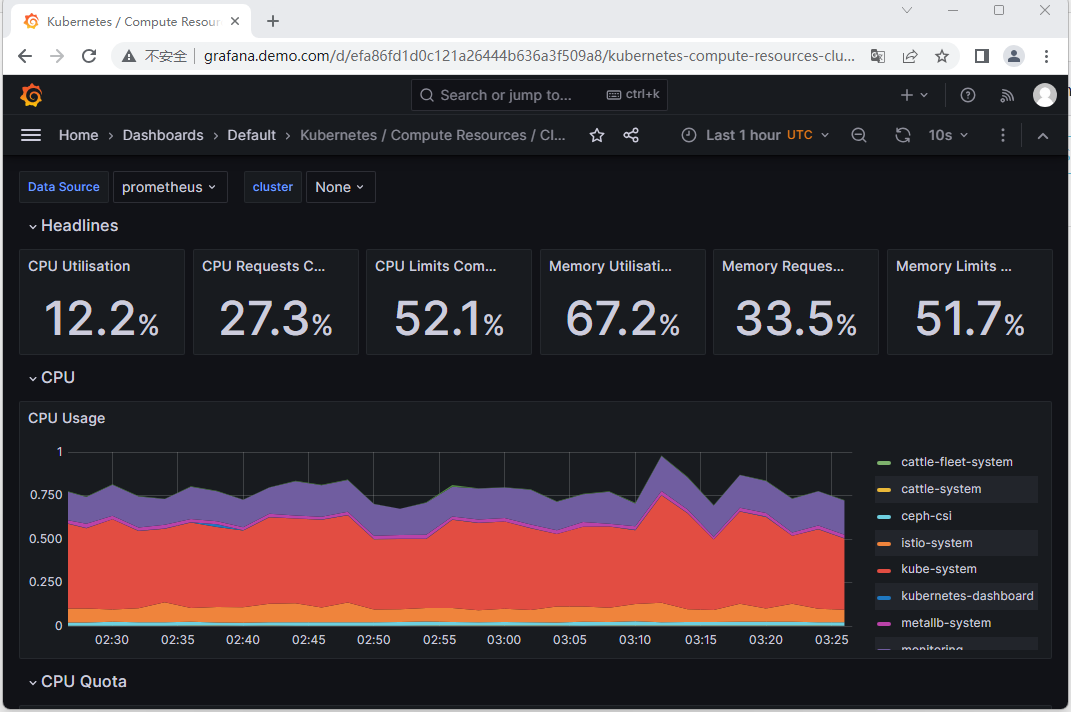

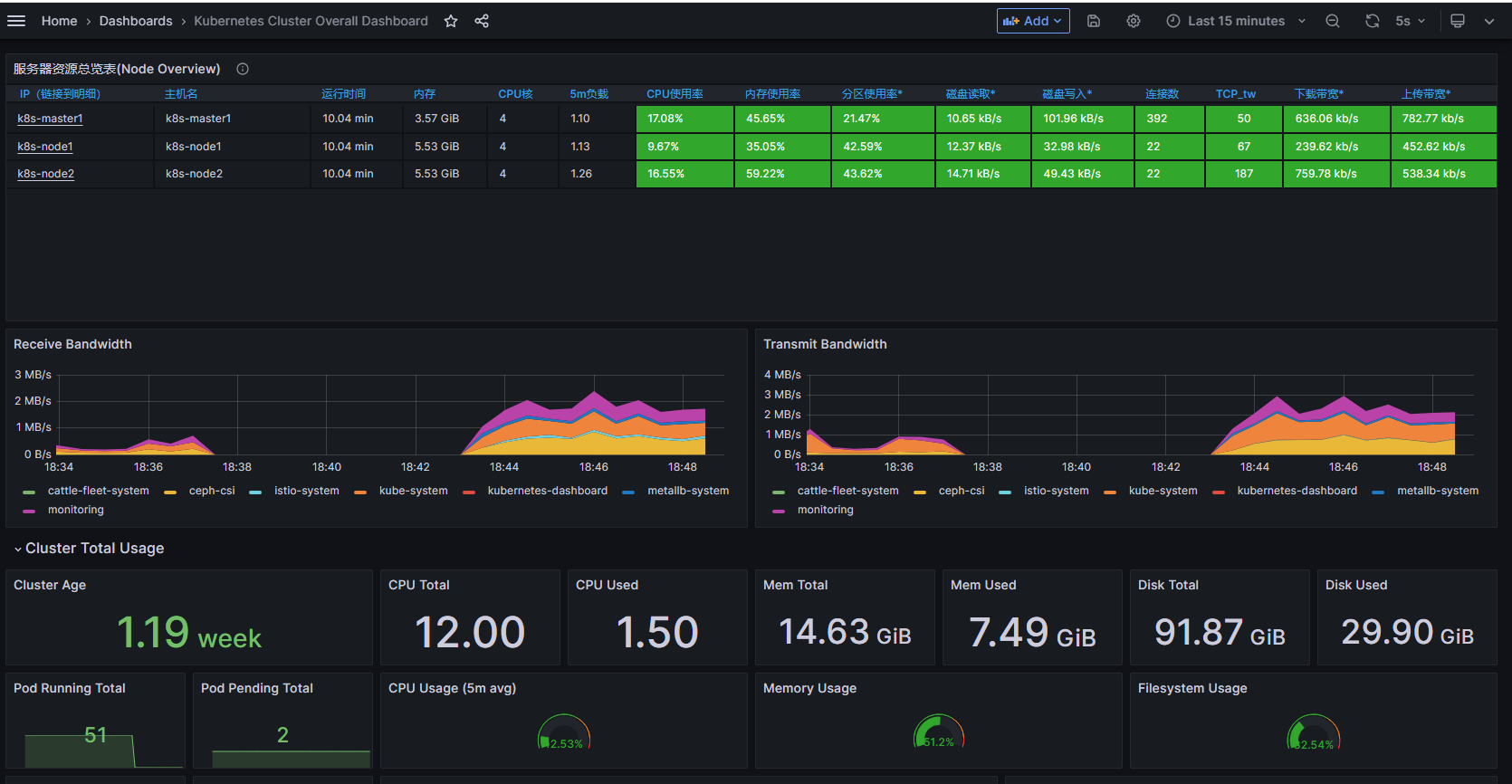

访问 http://grafana.demo.com (默认用户名admin,密码admin)

kube-prometheus已配置一些模板,如:

可以从 https://grafana.com/grafana/dashboards 找到所需模板,如14518模板

可以从 https://grafana.com/grafana/dashboards 找到所需模板,如14518模板

7.3.2 应用配置样例

ServiceMonitor 自定义资源(CRD)能够声明如何监控一组动态服务的定义。它使用标签选择定义一组需要被监控的服务。这样就允许组织引入如何暴露 metrics 的规定,只要符合这些规定新服务就会被发现列入监控,而不需要重新配置系统。

Prometheus就是通过ServiceMonitor提供的metrics数据接口把我们数据pull过来的。ServiceMonitor 通过label标签和对应的 endpoint 和 svc 进行关联。

查看kube-prometheus自带的ServiceMonitor

# kubectl -n monitoring get ServiceMonitor

NAME AGE

alertmanager-main 96m

app-test-ext-test 91s

blackbox-exporter 96m

coredns 96m

grafana 96m

kube-apiserver 96m

kube-controller-manager 96m

kube-scheduler 96m

kube-state-metrics 96m

kubelet 96m

node-exporter 96m

prometheus-k8s 96m

prometheus-operator 96m

7.3.2.1 ceph Metrics(集群外部ceph)

启动ceph exporter的监听端口

# ceph mgr module enable prometheus

# ss -lntp | grep mgr

LISTEN 0 128 10.2.20.90:6802 0.0.0.0:* users:(("ceph-mgr",pid=1108,fd=27))

LISTEN 0 128 10.2.20.90:6803 0.0.0.0:* users:(("ceph-mgr",pid=1108,fd=28))

LISTEN 0 5 *:8443 *:* users:(("ceph-mgr",pid=1108,fd=47))

LISTEN 0 5 *:9283 *:* users:(("ceph-mgr",pid=1108,fd=38)) //其中9283是ceph exporter的监听端口

查看

http://10.2.20.90:9283/metrics

创建svc和endpoints

# cat ext-ceph.yaml

apiVersion: v1

kind: Service

metadata:

labels:

app: app-test-ext-ceph

name: app-test-ext-ceph

namespace: test

spec:

ports:

- name: ceph-metrics

port: 9283

targetPort: 9283

protocol: TCP

---

apiVersion: v1

kind: Endpoints

metadata:

name: app-test-ext-ceph

namespace: test

labels:

app: app-test-ext-ceph

subsets:

- addresses:

- ip: 10.2.20.90

ports:

- name: ceph-metrics

port: 9283

创建ServiceMonitor

# cat sm.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

app: app-test-ext-ceph

name: app-test-ext-ceph

namespace: test

spec:

jobLabel: app-test-ext-ceph

endpoints:

- interval: 10s

port: ceph-metrics

path: /metrics

scheme: http

selector:

matchLabels:

app: app-test-ext-ceph

namespaceSelector:

matchNames:

- test

# kubectl apply -f ext-ceph.yaml -f sm.yaml

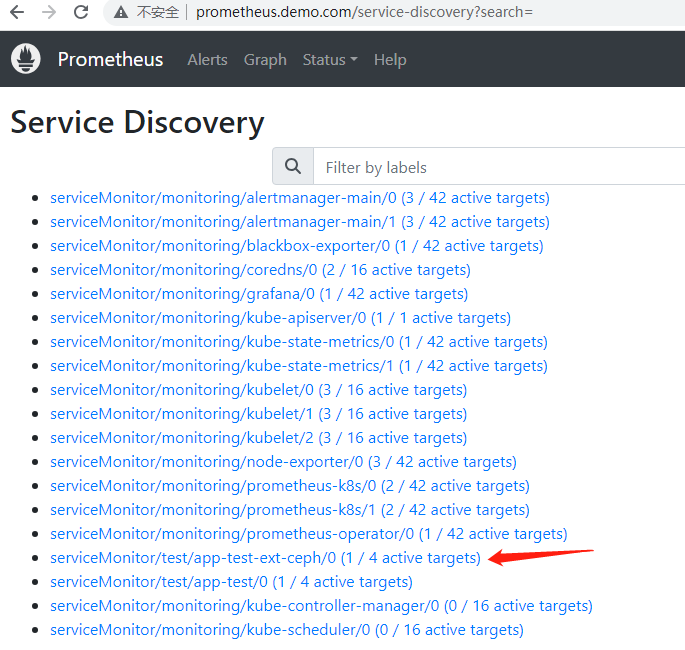

在prometheus中可以发现添加的ServiceMonitor已被识别。

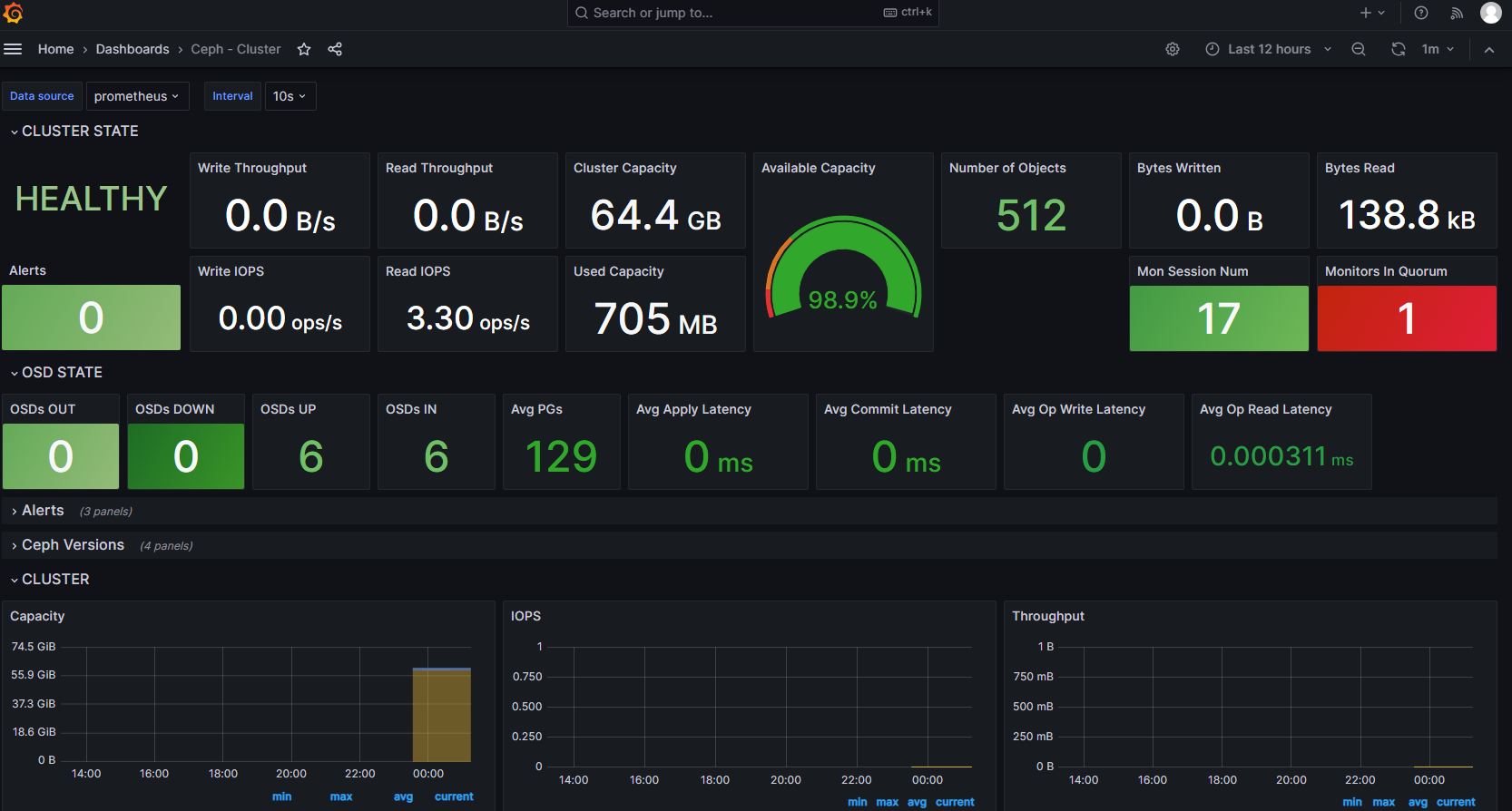

在grafana添加ceph模板2842,例如:

7.3.2.2 istio边车容器metrics(自动发现)

istio的边车容器默认要用15020作为metrics采集端口。

配置svc对像

# cat sv.yaml

apiVersion: v1

kind: Service

metadata:

labels:

sidecar-metrice: test

name: app-test-15020

namespace: test

spec:

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

selector:

security.istio.io/tlsMode: istio //匹对启用边车代理的容器。

ports:

- name: istio-sidecar

port: 15020

targetPort: 15020

protocol: TCP

type: ClusterIP

创建sm

# cat sm-test.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

app: app-test-sm

name: app-test-sm-istio-sidecar

namespace: test

spec:

jobLabel: app.kubernetes.io/name

endpoints:

- interval: 5s

port: istio-sidecar

path: /metrics

scheme: http

selector:

matchLabels:

sidecar-metrice: test //匹对带有“sidecar-metrice: test”标签的svc/endpoint

namespaceSelector:

matchNames:

- test

在prometheus中可以发现添加的ServiceMonitor已被识别。

7.3.2.3 nginx ingress Metrics

修改nginx ingress的pod模板,�打开metrics。

# vi ingress/nginx/kubernetes-ingress-main/deployments/deployment/nginx-ingress.yaml

...

ports:

- name: http

containerPort: 80

- name: https

containerPort: 443

- name: readiness-port

containerPort: 8081

- name: prometheus

containerPort: 9113

...

args:

- -nginx-configmaps=$(POD_NAMESPACE)/nginx-config

#- -default-server-tls-secret=$(POD_NAMESPACE)/default-server-secret

#- -include-year

#- -enable-cert-manager

#- -enable-external-dns

#- -v=3 # Enables extensive logging. Useful for troubleshooting.

#- -report-ingress-status

#- -external-service=nginx-ingress

- -enable-prometheus-metrics #取消注解。

...

# kubectl apply -f ingress/nginx/kubernetes-ingress-main/deployments/deployment/nginx-ingress.yaml

创建针对metrics的服务

# cat sv.yaml

apiVersion: v1

kind: Service

metadata:

labels:

nginx-metrice: test

name: app-test-9113

namespace: nginx-ingress

spec:

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

selector:

app: nginx-ingress

ports:

- name: prometheus

port: 9113

targetPort: 9113

protocol: TCP

type: ClusterIP

创建sm

# cat sm.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

app: app-test-nginx-ingress

name: app-test-nginx-ingress

namespace: nginx-ingress

spec:

jobLabel: app.kubernetes.io/name

endpoints:

- interval: 10s

port: prometheus

path: /metrics

scheme: http

selector:

matchLabels:

nginx-metrice: test

namespaceSelector:

matchNames:

- nginx-ingress

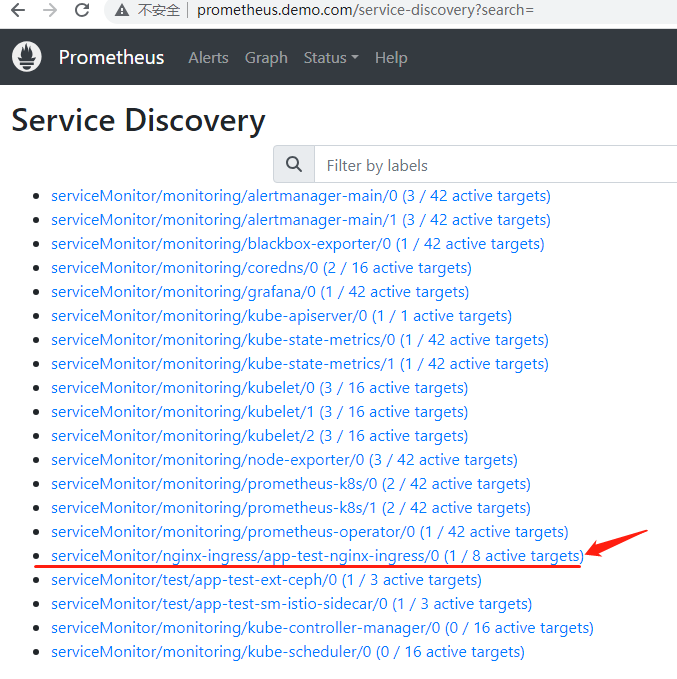

则在prometheus中可以发现添加的ServiceMonitor已被识别。

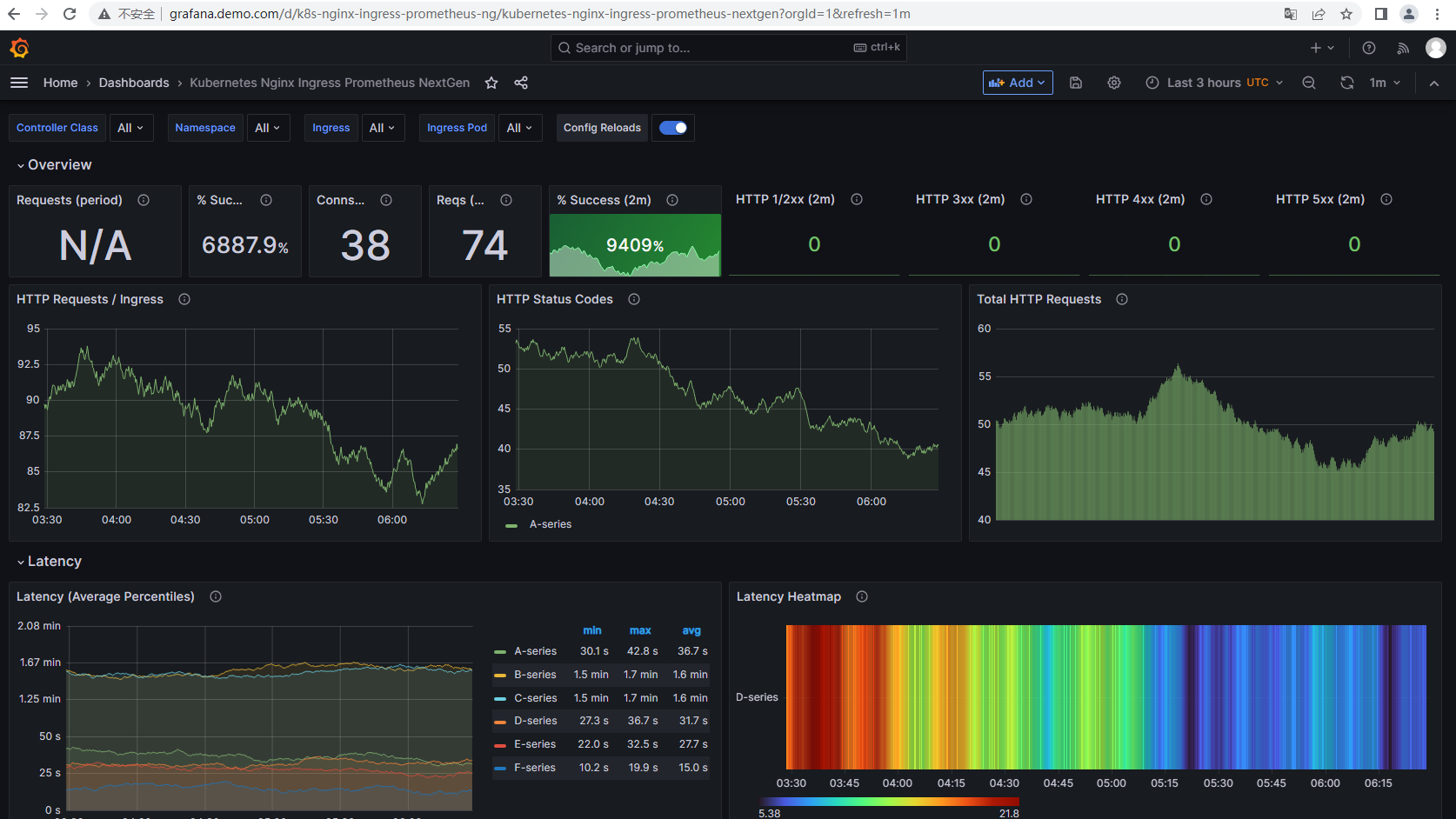

在grafana中添加模板即可,如下

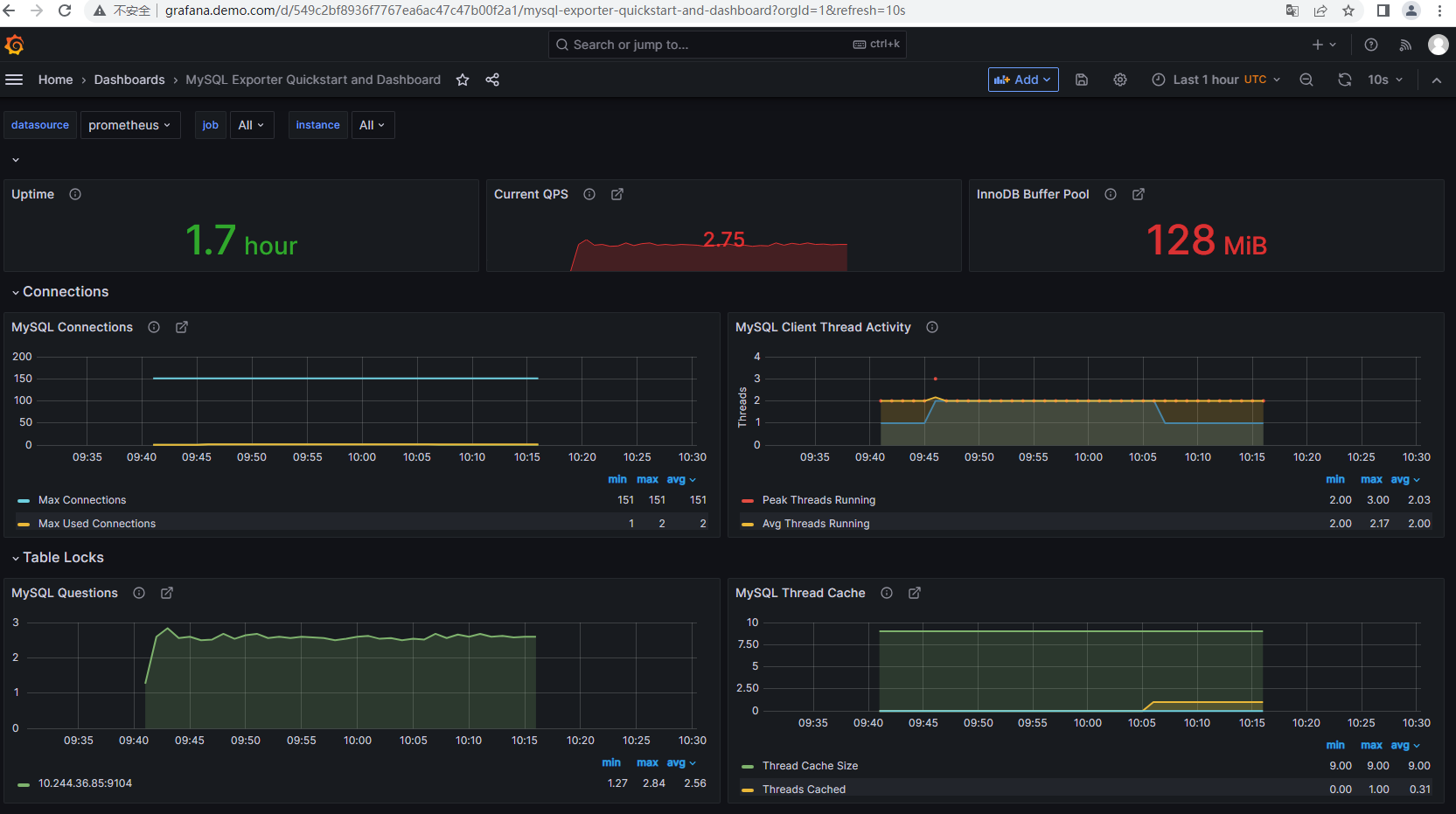

7.3.2.4 mysql Metrics

拉取mysqld-exporter并转私仓harbor.demo.com/exporter/mysqld-exporter:latest 。

# docker pull bitnami/mysqld-exporter

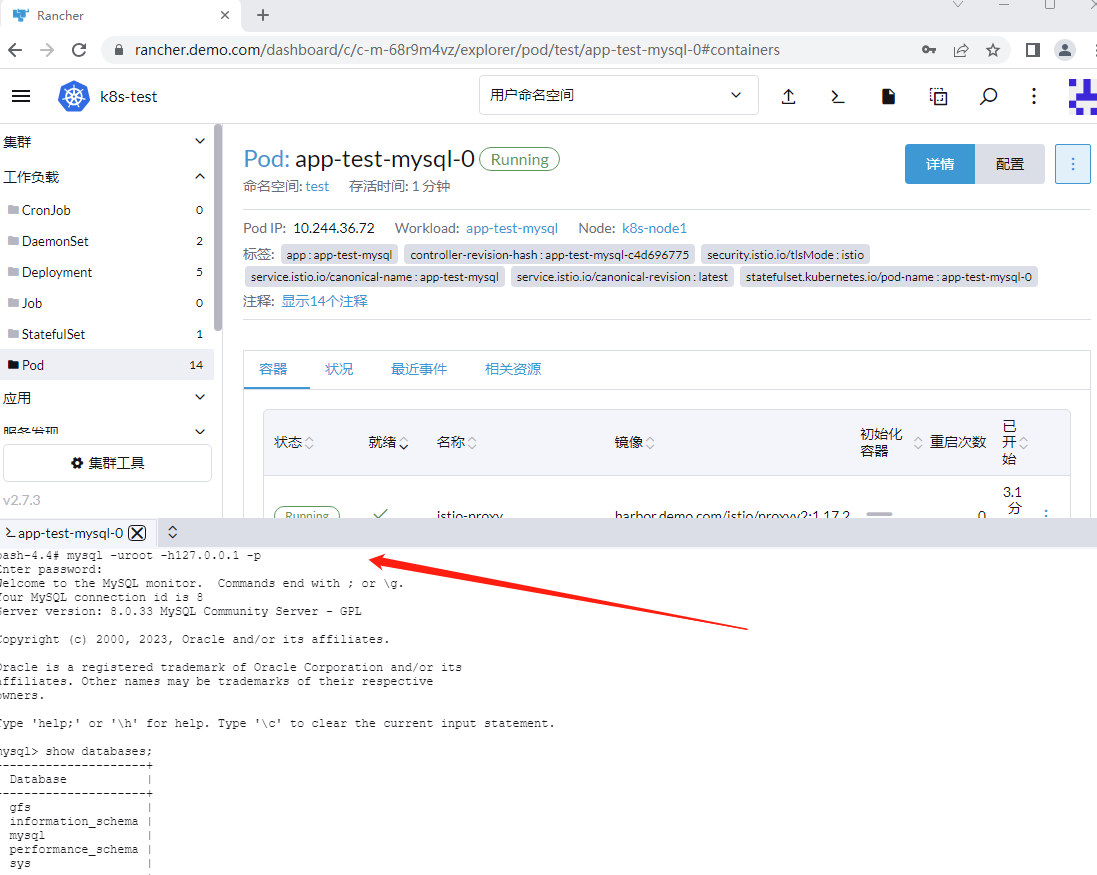

开启数据库,采用有状态部署方式

# cat mysql.yaml

apiVersion: apps/v1

kind: StatefulSet

metadata:

name: app-test-mysql

namespace: test

spec:

serviceName: app-test-mysql

selector:

matchLabels:

app: app-test-mysql

replicas: 1

template:

metadata:

name: app-test-mysql

namespace: test

labels:

app: app-test-mysql

spec:

containers:

- name: mysql

image: harbor.demo.com/app/mysql:latest

imagePullPolicy: IfNotPresent

volumeMounts:

- name: mysql-data

mountPath: /var/lib/mysql

ports:

- name: port-test-01

containerPort: 3306

protocol: TCP

env:

- name: MYSQL_ROOT_PASSWORD

value: abc123456

args:

- --character-set-server=gbk

volumeClaimTemplates:

- metadata:

name: mysql-data

spec:

accessModes: [ "ReadWriteOnce" ]

storageClassName: csi-rbd-sc

resources:

requests:

storage: 5Gi

---

apiVersion: v1

kind: Service

metadata:

labels:

app: app-test-mysql

name: app-test-mysql

namespace: test

spec:

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

selector:

app: app-test-mysql

ports:

- name: port01

port: 3306

targetPort: 3306

protocol: TCP

type: ClusterIP

mysql库创建相应用户并赋权

use mysql;

create user 'admin'@'%' identified with mysql_native_password by '123456';

grant ALL on *.* to 'admin'@'%' with grant option;

flush privileges;

开启exporter,默认采用9104端口来收集metrics

# cat exporter.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: app-test-mysql-exporter

namespace: test

spec:

selector:

matchLabels:

app: app-test-mysql-exporter

replicas: 1

template:

metadata:

name: app-test-mysql-exporter

namespace: test

labels:

app: app-test-mysql-exporter

spec:

containers:

- name: mysqld-exporter

image: harbor.demo.com/exporter/mysqld-exporter:latest

imagePullPolicy: IfNotPresent

ports:

- name: port-test-02

containerPort: 9104

protocol: TCP

env:

- name: DATA_SOURCE_NAME

value: 'admin:123456@(app-test-mysql.test.svc:3306)/'

---

apiVersion: v1

kind: Service

metadata:

labels:

app: app-test-mysql-exporter

name: app-test-mysql-exporter

namespace: test

spec:

sessionAffinity: ClientIP

sessionAffinityConfig:

clientIP:

timeoutSeconds: 10800

selector:

app: app-test-mysql-exporter

ports:

- name: mysql-exporter

port: 9104

targetPort: 9104

protocol: TCP

type: ClusterIP

创建sm

# cat sm.yaml

apiVersion: monitoring.coreos.com/v1

kind: ServiceMonitor

metadata:

labels:

app: app-test-mysql-exporter

name: app-test-mysql-exporter

namespace: test

spec:

jobLabel: app.kubernetes.io/name

endpoints:

- interval: 10s

port: mysql-exporter

path: /metrics

scheme: http

selector:

matchLabels:

app: app-test-mysql-exporter

namespaceSelector:

matchNames:

- test

则在prometheus中可以发现添加的ServiceMonitor已被识别。

在grafana中添加模板即可,如:

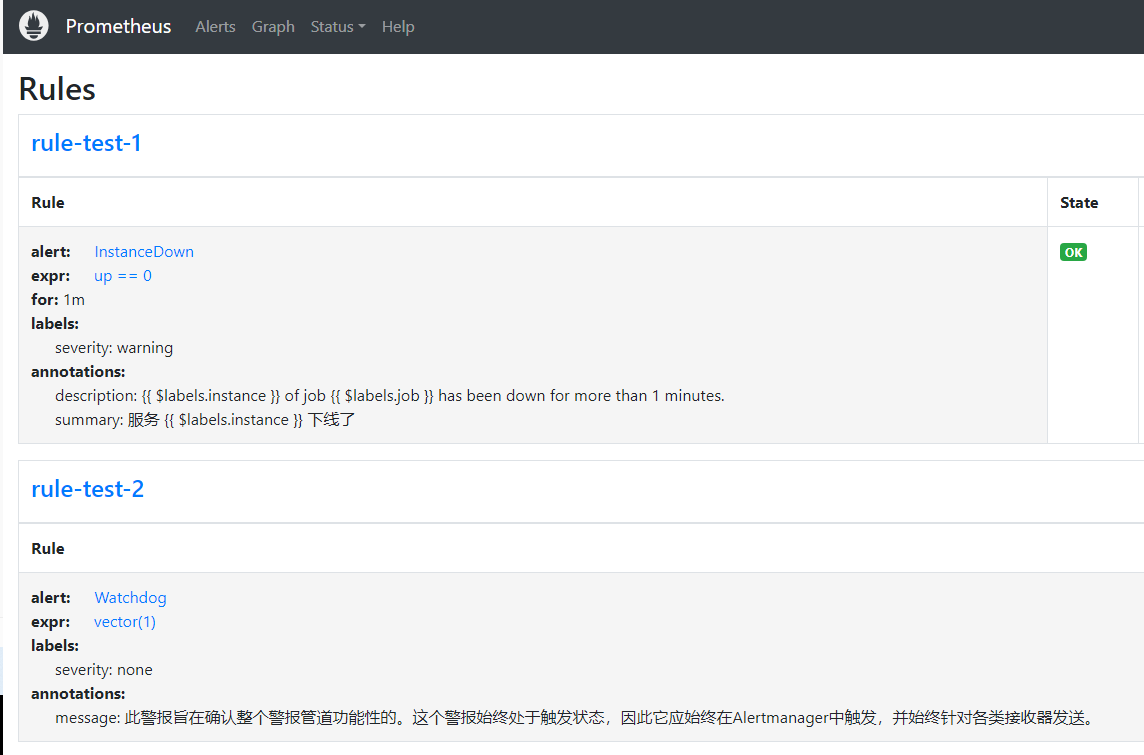

7.3.3 配置rule和告警

7.3.3.1 配置rule

查看kube-prometheus安装时提供的规则

# kubectl -n monitoring get prometheusrules

NAME AGE

alertmanager-main-rules 22h

grafana-rules 22h

kube-prometheus-rules 22h

kube-state-metrics-rules 22h

kubernetes-monitoring-rules 22h

node-exporter-rules 22h

prometheus-k8s-prometheus-rules 22h

prometheus-operator-rules 22h

为方便查看测试效果,可删除默认安装的规则.

添加测试规则

# cat rule1.yaml

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

labels:

prometheus: k8s

role: alert-rules

name: rule-test-1

namespace: monitoring

spec:

groups:

- name: rule-test-1

rules:

- alert: InstanceDown

expr: up == 0

for: 1m

labels:

severity: warning

annotations:

summary: "服务 {{ $labels.instance }} 下线了"

description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minutes."

# cat rule2.yaml

apiVersion: monitoring.coreos.com/v1

kind: PrometheusRule

metadata:

labels:

prometheus: k8s

role: alert-rules

name: rule-test-2

namespace: monitoring

spec:

groups:

- name: rule-test-2

rules:

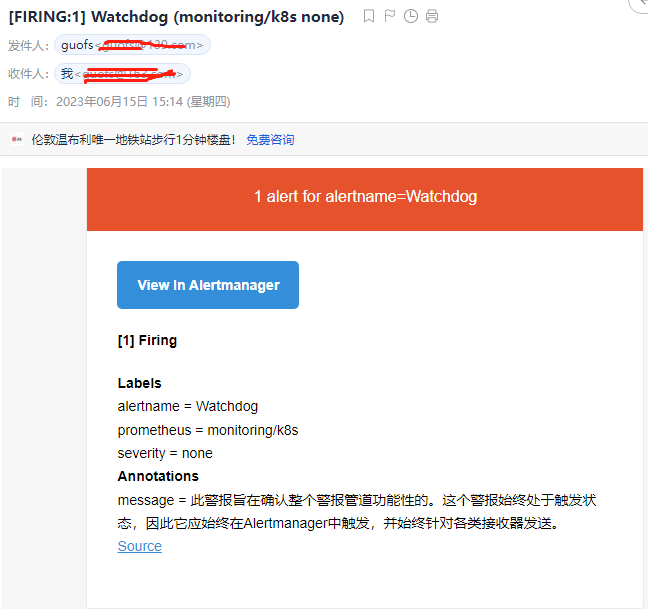

- alert: Watchdog

annotations:

message: |

此警报旨在确认整个警报管道功能性的。这个警报始终处于触发状态,因此它应始终在Alertmanager中触发,并始终针对各类接收器发送。

expr: vector(1)

labels:

severity: none

# kubectl create -f rule1.yaml -f rule2.yaml

# kubectl -n monitoring get prometheusrules

NAME AGE

rule-test-1 87m

rule-test-2 32m

7.3.3.2 配置alert

# cat /tmp/alert.conf

global:

smtp_smarthost: 'smtp.139.com:25'

smtp_from: 'guofs@139.com'

smtp_auth_username: 'guofs@139.com'

smtp_auth_password: 'abc1239034b78612345678'

smtp_require_tls: false

route:

group_by: ['alertname']

group_wait: 30s

group_interval: 5m

repeat_interval: 1h

receiver: 'default-receiver'

receivers:

- name: 'default-receiver'

email_configs:

- to: 'guofs@163.com'

inhibit_rules:

- source_match:

severity: 'critical'

target_match:

severity: 'warning'

equal: ['alertname', 'dev', 'instance']

# kubectl -n monitoring create secret generic alertmanager-main --from-file=alertmanager.yaml=/tmp/alert.conf --dry-run -o yaml | kubectl -n=monitoring create -f -

重启pod,以便配置生效。

kubectl -n monitoring delete pod alertmanager-main-0

查看日志

# kubectl -n monitoring logs pod/alertmanager-main-0 -f

收到告警邮件如下